Dorian Chan

I'm an AI Resident at Apple working on computational photography. I recently received my PhD from the Computer Science Department at Carnegie Mellon University, where I was advised by the wonderful Matthew O'Toole. I've also previously worked as a research intern at Meta Reality Labs Research and Snap Research.

My research focuses on rethinking the conventional camera in order to improve real-world robustness, reduce computation time and potentially even capture phenomena traditionally invisible to the human eye. In short, I think deeply about the underlying physical limits of modern camera hardware, and how computational tools can be used to fill the gaps. This joint perspective inspired my work on extremely cheap high-speed cameras, far more robust and energy-efficient LiDAR systems, cameras that can see vibrations, and more. My work has been recognized with the Best Paper Honorable Mention Award at CVPR 2022, and 1st place at the SIGGRAPH 2020 Student Research Competition. I have also served as a reviewer for CVPR, ICCV, ECCV, TOG, ICCP and TCI.

I quite enjoy fishing, and on occasion I try to make music. I'm also a big Denver Nuggets fan.

drop me a line: dorianychan@gmail.com

Some of my past work:

We explore the perceptual impacts of time-multiplexed holographic displays. While traditional architectures suffer from significant blurring and strobing effects, we show that a paradigm based on treating a traditionally time-multiplexed holographic system as a noisy high-speed display produces artifact-free output.

We explore how to build next-generation projectors that are capable of programmably projecting unique content at multiple planes via precisely-controlled laser light. This never-before-seen capability enables new interfaces where users see different content at different distances, and novel methods for depth sensing. Building such a system requires careful modeling, accomplished using AI-driven tools to capture the complexities of holographic light transport.

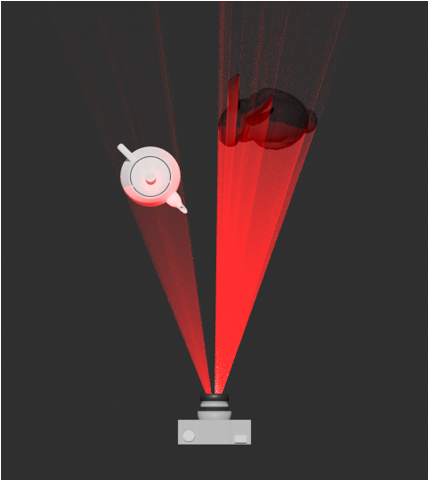

We build new light sources for LiDAR systems, that programmably concentrate light using the unique properties of laser light. By intelligently redistributing light according to priors on scene content, e.g., dark versus bright objects, we can build new depth sensors that have far increased depth and dynamic range compared to traditional devices. In our experiments, our system can display patterns at 2700x the brightness of a traditional approach.

We demonstrate a new methodology towards motion deblurring by designing a time-varying point-spread function. Along with an appropriate compressed sensing-based optimization algorithm, we can recover high-speed 192kHz videos (2x improvement over past work), suitable for tasks like motion capture, particle-image velocimetry and ballistics.

We show how the tiny vibrations captured by our previously proposed "seeing-sound" camera can be used to potentially localize human-object interactions. For instance, we can identify where a ping pong ball bounces on a table, or where a user steps in a room --- all without needing to directly see the aforementioned event.

We construct a simple camera system for "seeing sound", using just a laser, a rolling-shutter and a global-shutter camera (a far cheaper configuration than standard high-speed cameras). We can use this system to separate sound sources in a room, reconstruct human voices that vibrate a bag of chips and visualize the vibrations of a tuning fork.

We demonstrate an approach for depth-selective imaging, where a camera only sees objects at a predefined distance away via holographically-manipulated laser light. Such a system enables new applications in human-robot safety, privacy-preserving cameras and optical touchscreens.

We explore the limits of "seeing around corners", by showing that a circular LiDAR scan of points can be effectively used for non-line-of-sight imaging via the projection-slice theorem or a careful construction of ADMM. Our work demonstrates explicit connections between NLOS and computed tomography, a relationship that has long been speculated upon but never before been proven.

We create a new framework for high-dynamic range tonemapping, that uses modern gradient-descent tools to optimally match the image perceived on a standard monitor with a desired HDR scene in-the-wild. Our approach improved perceptual quality by 4% over the previous state-of-the-art in a user study.

We explore how electroencephalography devices can be used to control virtual reality devices with a thought.

We develop a new methodology for digitizing real-world materials for later rendering using just mobile phone videos.